Locks, Keys and the Limits of AI in Biology

How good is AI for biotech going to get? I don't know. But maybe if we understand what AI can't do, we'll get clearer on what it might do. Today, we're talking about theoretical limits on bio AI.

Transcript

In biology, we're not usually concerned with theoretical limits on our ability to understand the cell. We have our hands full dealing with the practical limits. I'm just out here trying to get good experimental data at a reasonable price. If there's a limit on how good that data can be, we're not close to it. We'll worry about it when we get there.

But theoretical limits do come up in science and they do impact what technologies we can develop. In physics, you've got limits like the Heisenberg Uncertainty Principle. You can't know both the position and the momentum of a particle with perfect accuracy at the same time - no matter how good your experiments are.

In computer science, you have P≠NP. This is the conjecture, unproven but widely believed, that certain kinds of computing problems can't ever be solved quickly - no matter how clever your algorithms are.

It's a testament to the power of AI that it's probably worth thinking about whether similar limits exist for it and what they might look like. What if you had tons of compute and tons of data to train on? What could you still not do?

It matters because even if AI tech moves quickly, like many of us think it will, it still can't do everything. The remaining problems become more important, in relative terms, as AI makes other things easier.

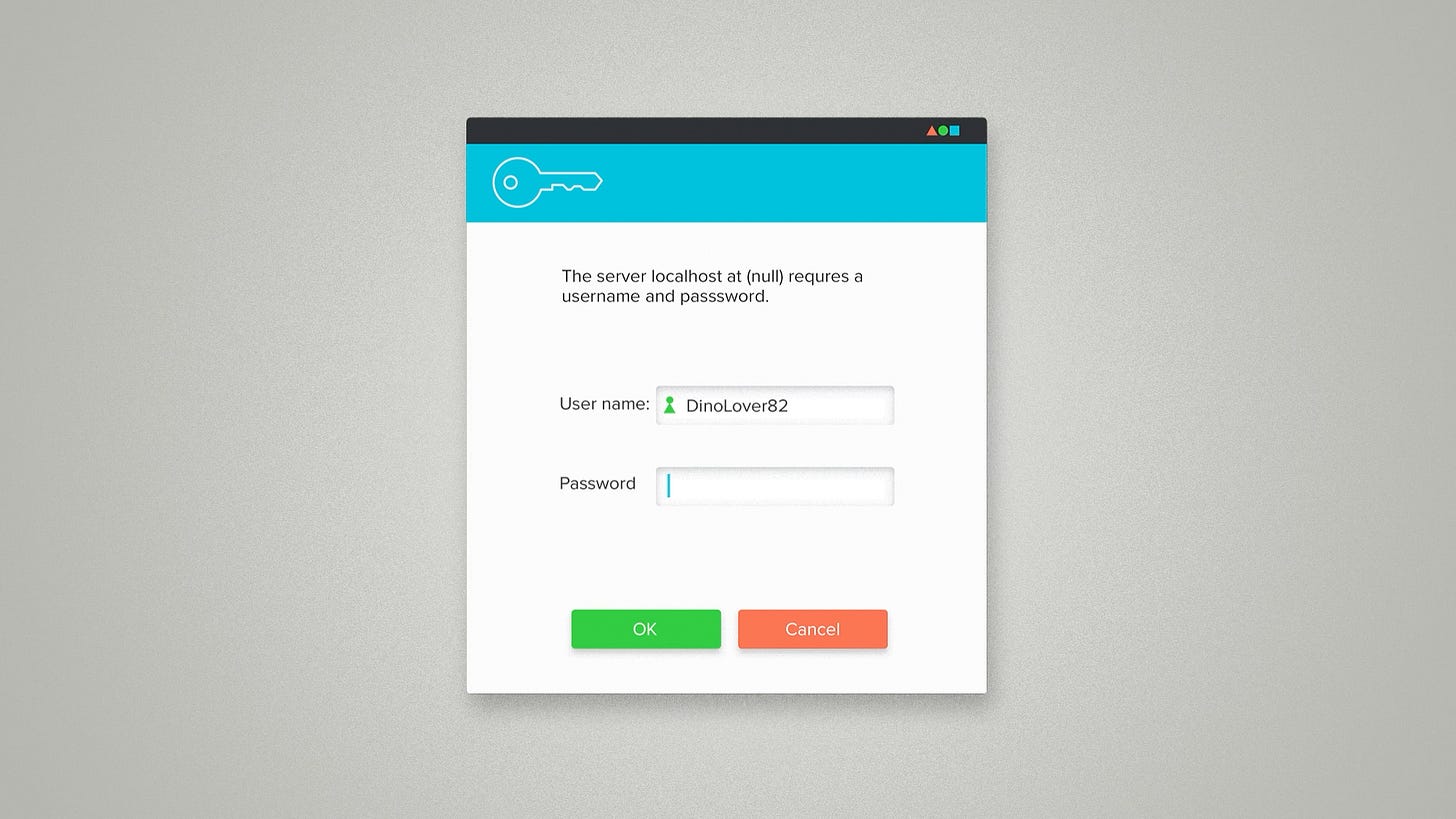

Here's an example of something AI can't do. It can't guess your password. I don't mean my favorite password, hunter2, I mean a proper, randomly generated password. Why not? Because good passwords are orthogonal, concurrent and context-free.

Orthogonal meaning the characters of the password are completely independent of one another. If I know the first character is an exclamation point, that doesn't help me to identify the other characters.

Concurrent, because you need to get every character right for the password to work. A password that is almost correct fails just as completely as one that is totally wrong.

And context-free because there's no external dataset that can help you. In spy movies, they find a post-it note on the monitor that says "the password is my dog's birthday." In the hard version of the password problem, there are no hints.
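To put rough numbers on why those three properties make guessing hopeless, here's a minimal sketch. The 94-character alphabet and the helper names (`search_space`, `entropy_bits`, `check_password`) are my own assumptions for illustration, not anything from a real password system.

```python
import math
import string

# Assumption: passwords are drawn uniformly from printable,
# non-space ASCII characters (letters, digits, punctuation).
ALPHABET = string.ascii_letters + string.digits + string.punctuation

def search_space(alphabet_size: int, length: int) -> int:
    """Number of possible passwords when every character is independent."""
    return alphabet_size ** length

def entropy_bits(alphabet_size: int, length: int) -> float:
    """Bits of uncertainty. Independence means the bits simply add up."""
    return length * math.log2(alphabet_size)

def check_password(guess: str, secret: str) -> bool:
    """All-or-nothing feedback: a near miss looks the same as a total miss."""
    return guess == secret

if __name__ == "__main__":
    n = search_space(len(ALPHABET), 16)
    print(f"16-character password: {n:.3e} possibilities")
    print(f"about {entropy_bits(len(ALPHABET), 16):.1f} bits of entropy")
```

Orthogonality is why the exponent is the full password length, concurrency is why `check_password` returns only true or false, and context-free means there's nothing else to condition on.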

So what about biology? Are there problems in biology that look like guessing passwords? I think there probably are. In the way that I was trained, I would expect to find password-like problems everywhere.

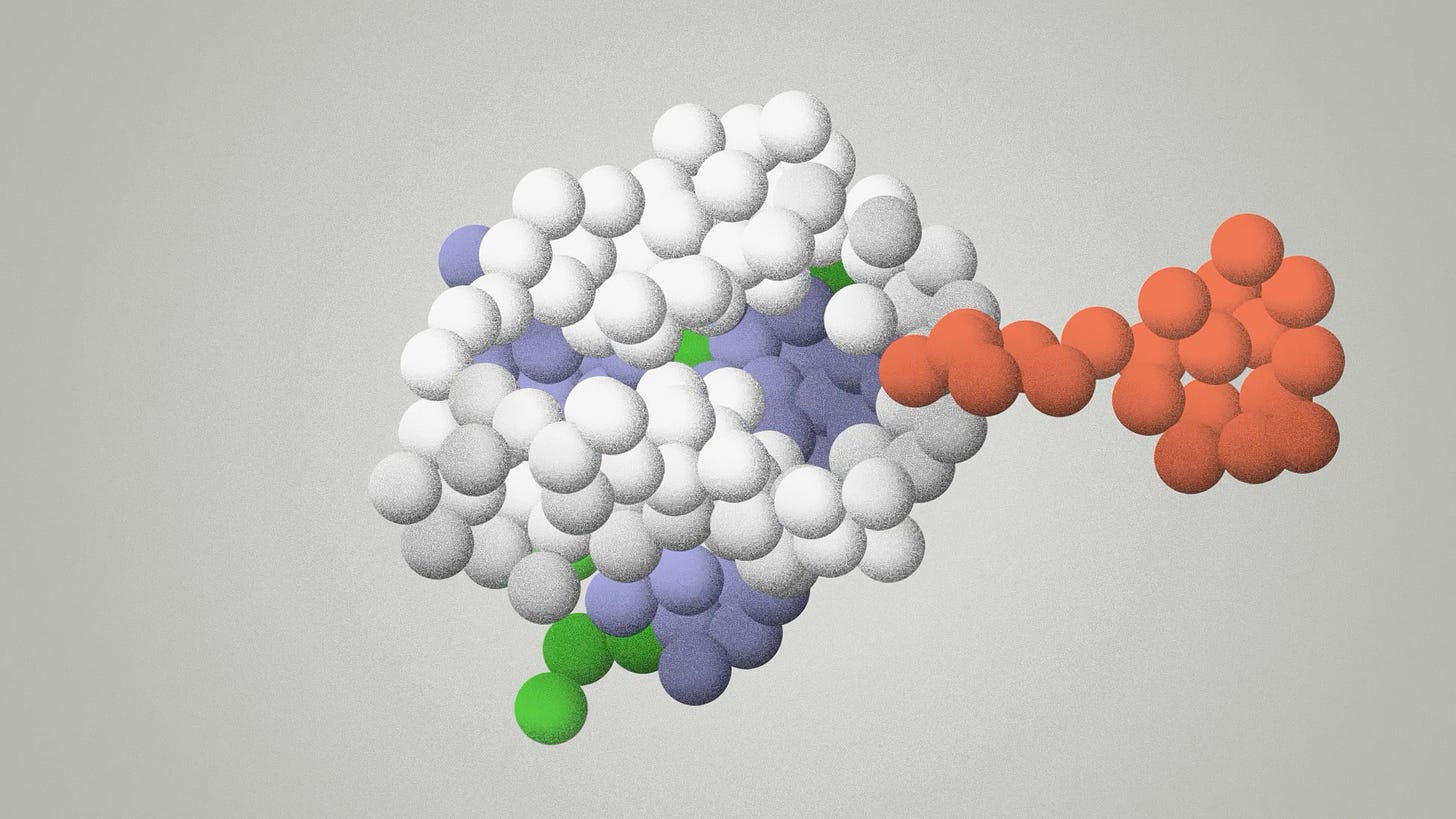

The lock-and-key model is probably the defining metaphor for all of molecular biology. We use it to think about almost every important biological function at the molecular level. It explains how an antibody finds its target, how an enzyme catalyzes a chemical reaction, how a transcription factor controls gene expression, how the kinases of a signaling cascade work together. Everything.

The basic idea is that two biological molecules are fundamentally two shapes and that the shapes have to fit together in order for the magic to happen.

If one protein has an outie piece poking out, the other protein has to have a matching innie piece. A positive charge here has to line up with a negative charge there. These 3 hydrogen bonds have to hit in exactly the right places. If they don't line up just right, the binding free energy will be too high and the interaction won't happen. No signaling pathway. No enzymatic reaction. No drug activity.

The lock-and-key metaphor is meant to evoke that this interaction has password-like specificity. Each tooth of the key has to match each pin of the lock, or the lock doesn't turn. If you take the analogy at face value, you'd infer that AI can't predict any biological function - they're all passwords.

But biology gives us some wiggle room. The parts of a protein, unlike a physical lock, are not fully orthogonal. They move together according to the rules of protein folding. So it may not be necessary to guess each feature of an interaction independently.

Unlike guessing a password, biological interactions don't have to be totally concurrent. Sometimes close is good enough. A drug with 5 out of 6 of the needed molecular interactions might still bind weakly and give us a measurable effect. An AI model can learn from that near miss and get closer next time.
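Here's a toy sketch of why partial credit changes the game. It assumes a made-up scoring function, `match_score`, that reports how many positions of a guess are correct, which stands in very loosely for a weak but measurable binding signal. Real assays are noisier and the features are not independent, so treat this as an illustration of the idea rather than a model of drug discovery.

```python
import string

ALPHABET = string.ascii_lowercase

def match_score(guess: str, secret: str) -> int:
    """Graded feedback: how many positions match (hypothetical 'assay')."""
    return sum(g == s for g, s in zip(guess, secret))

def greedy_search(secret: str, alphabet: str = ALPHABET) -> tuple[str, int]:
    """Fix one position at a time, keeping any change that raises the score.

    Returns the recovered string and the number of scoring queries used.
    """
    guess = [alphabet[0]] * len(secret)
    best = match_score("".join(guess), secret)
    queries = 1
    for i in range(len(secret)):
        for ch in alphabet:
            candidate = guess.copy()
            candidate[i] = ch
            score = match_score("".join(candidate), secret)
            queries += 1
            if score > best:  # a near miss that got nearer: keep it
                guess, best = candidate, score
                break
    return "".join(guess), queries

if __name__ == "__main__":
    found, queries = greedy_search("kinase")
    print(found, queries, "queries vs", len(ALPHABET) ** 6, "blind guesses")
```

With graded feedback the number of tries grows with length times alphabet size, instead of alphabet size raised to the length. That gap is the whole difference between a password and a problem you can learn your way into.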

And biology is never context-free. All the parts of a cell are evolving together. So there is always a chance that some other information exists that can guide our predictions. It's the equivalent of a post-it note on the monitor that says "this class of drug usually binds to a particular pocket shape." Biology is full of hints.

So where does that leave us? From where I sit, the most important problems in biology are going to be right at the limit of what we can achieve with AI. They're almost locks and keys. They're almost password problems.

But biology gives us a little bit of squishiness and context, so they're not quite as hard as they theoretically could be. A good model will take you from infinite possible keys down to thousands of possible keys, but then you do have to try them in the real biological lock.
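As a rough sketch of that hand-off, assume you have some model that scores candidates and some lab test that gives a yes or no. Both `model_score` and `lab_assay` below are hypothetical placeholders, and the numbers in the usage example are invented purely to show the mechanics.

```python
import heapq
from typing import Callable, Iterable, Optional, Tuple, TypeVar

T = TypeVar("T")

def shortlist_then_test(
    candidates: Iterable[T],
    model_score: Callable[[T], float],  # hypothetical: higher means more promising
    lab_assay: Callable[[T], bool],     # hypothetical: the real biological lock
    k: int,
) -> Tuple[Optional[T], int]:
    """Let the model cut the pool to k keys, then try them in ranked order.

    Returns the first candidate that passes the assay (or None)
    and the number of lab tests that were run.
    """
    shortlist = heapq.nlargest(k, candidates, key=model_score)
    for tests_run, candidate in enumerate(shortlist, start=1):
        if lab_assay(candidate):
            return candidate, tests_run
    return None, len(shortlist)

if __name__ == "__main__":
    # Toy setup: the model thinks the answer is near 42.4, the true key is 40.
    hit, tests = shortlist_then_test(
        range(100),
        model_score=lambda x: -abs(x - 42.4),
        lab_assay=lambda x: x == 40,
        k=10,
    )
    print(hit, tests)
```

The model doesn't have to be right. It only has to be good enough that the true key lands inside a shortlist you can afford to test.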

The winning R&D strategy looks like pushing AI to the limit, then recognizing the right time to jump from AI and get real lab data to take you beyond those limits.