Against Interpretability for Bio AI

Somebody smart once said that scientific theories should be made as simple as possible but not simpler. Today we're talking about why our models of biology should be as interpretable as possible but not interpretabler.

Transcript

These days it's common to hear the complaint that AI models are not interpretable - that they're just a black box. Millions of parameters with a complex architecture that produce amazing behaviors, but no human can understand why. There's a sense of helplessness in the face of all this complexity. It's as though we're working with something that is bigger than we are, something that has a life of its own.

And speaking as a biologist, I'd just like to say welcome to the god damn club. Because biology has been dealing with this since the Modern Synthesis. We're all about complex interaction networks with a life of their own. Systems with more parameters than any human can conceive? We eat those for breakfast.

Biological models have always been little islands of understanding in an ocean of complexity. So now, at this moment when many new biological models are also going to be AI models, it's worth checking in on what we, the biologists, actually want a model to do.

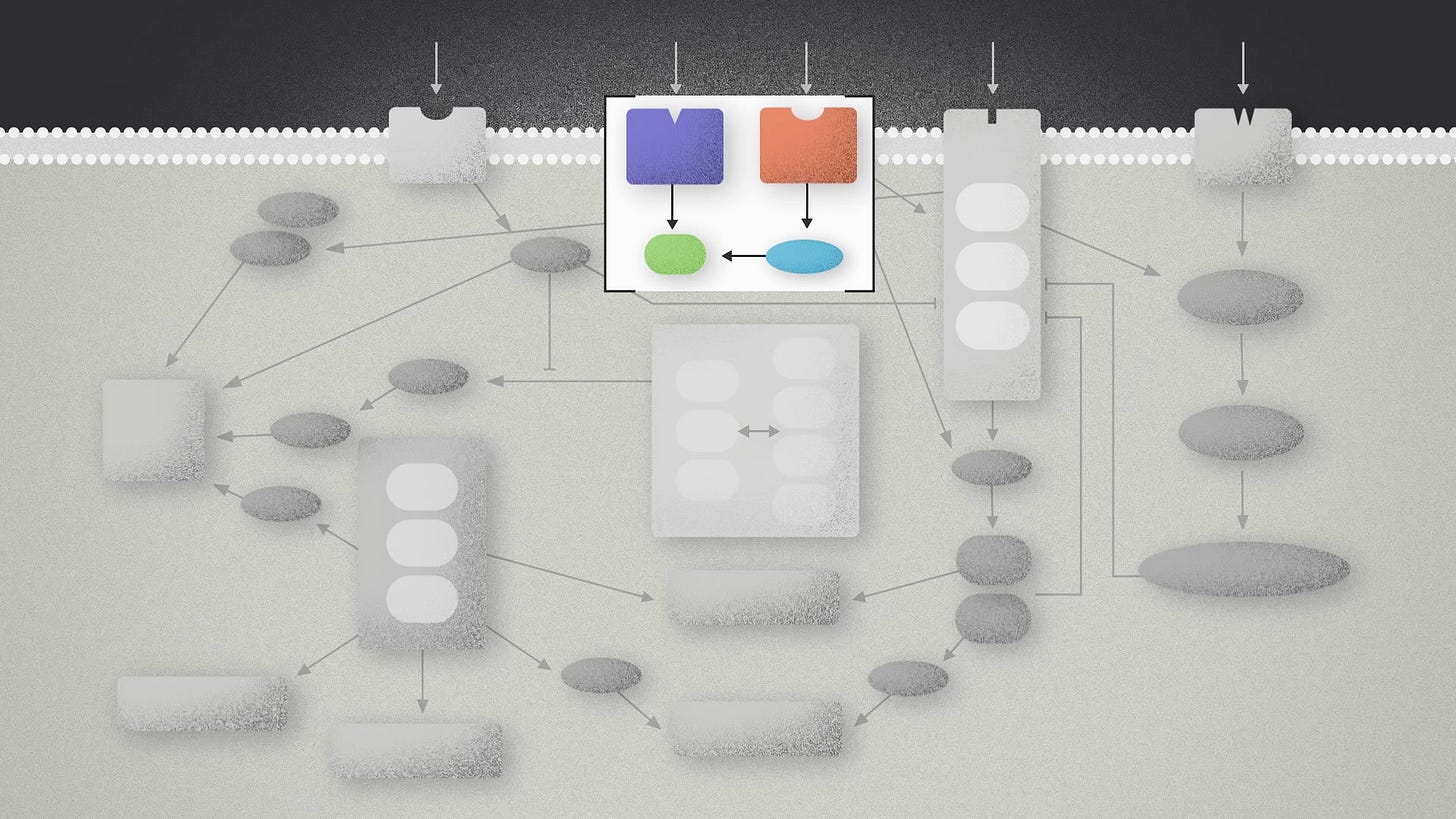

A model is interpretable to me when I understand it. Usually it isn't good practice to define a technical term as "I know it when I see it." But for interpretability, that's literally what it means. A specific scientist wants a model to generate predictions in a language that they personally find familiar. A genetic explanation means you know the gene that causes a particular phenotype. In molecular biology, you want to know the gene names and the collection of interactions between them. In systems biology, you want genes, interactions, and differential equations that describe those interactions more quantitatively.

One way to think about progress in biology is that these languages of explanation get richer and more diverse. Naturally, scientists prefer explanations that come from their own area of expertise: biophysics, biochemistry, evolution. We have nerd rivalries about whose models are better. But biologists are generally in agreement that our explanations only ever capture part of the story.

This is something that I love about biology. Universal laws of nature? We don't do that here. Biology is too diverse and too context-dependent to have laws in the way physics or chemistry does. Everything that looks like it might be a rule turns out to be three exceptions wearing a trenchcoat. That doesn't mean that biological models are futile; they're powerful. But it means that every biologist is aware, at some level, that too much interpretability can be a trap.

Let me give an example. Consider Notch, a signaling receptor that controls cell fate and cell proliferation. It's called Notch because it was discovered in fruit flies, which get little notches on their wings when the gene is mutated. In nematodes, Notch regulates the germline stem cells. In humans, Notch affects the development of the brain, the heart, and the immune system, depending on the other signals that are present. Notch is a tumor suppressor: loss of Notch function in some cells can lead to uncontrolled growth and certain cancers. But it is also an oncogene: too much Notch function in other cells can lead to other cancers.

Is Notch signaling exceptionally complicated? Not really. All of this stuff is normal to biologists, who are used to the idea that a gene can do different things in different contexts: humans versus nematodes, brain versus liver, blood cancer versus bladder cancer.

But it raises the question: what does an interpretable model of Notch function really look like? When I said earlier that "Notch is a signaling receptor that controls cell fate" - that's a sentence that a human can understand. But how much of the truth is really captured by that?

Why are cancer biologists even using the name "Notch" at all? A name assigned more than 100 years ago from a completely different functional context in a completely different organism? It isn't wrong to give a protein a name and focus on its role in a particular process. But that interpretability comes at a cost. It spotlights a main character and puts the rest of the story in the background.

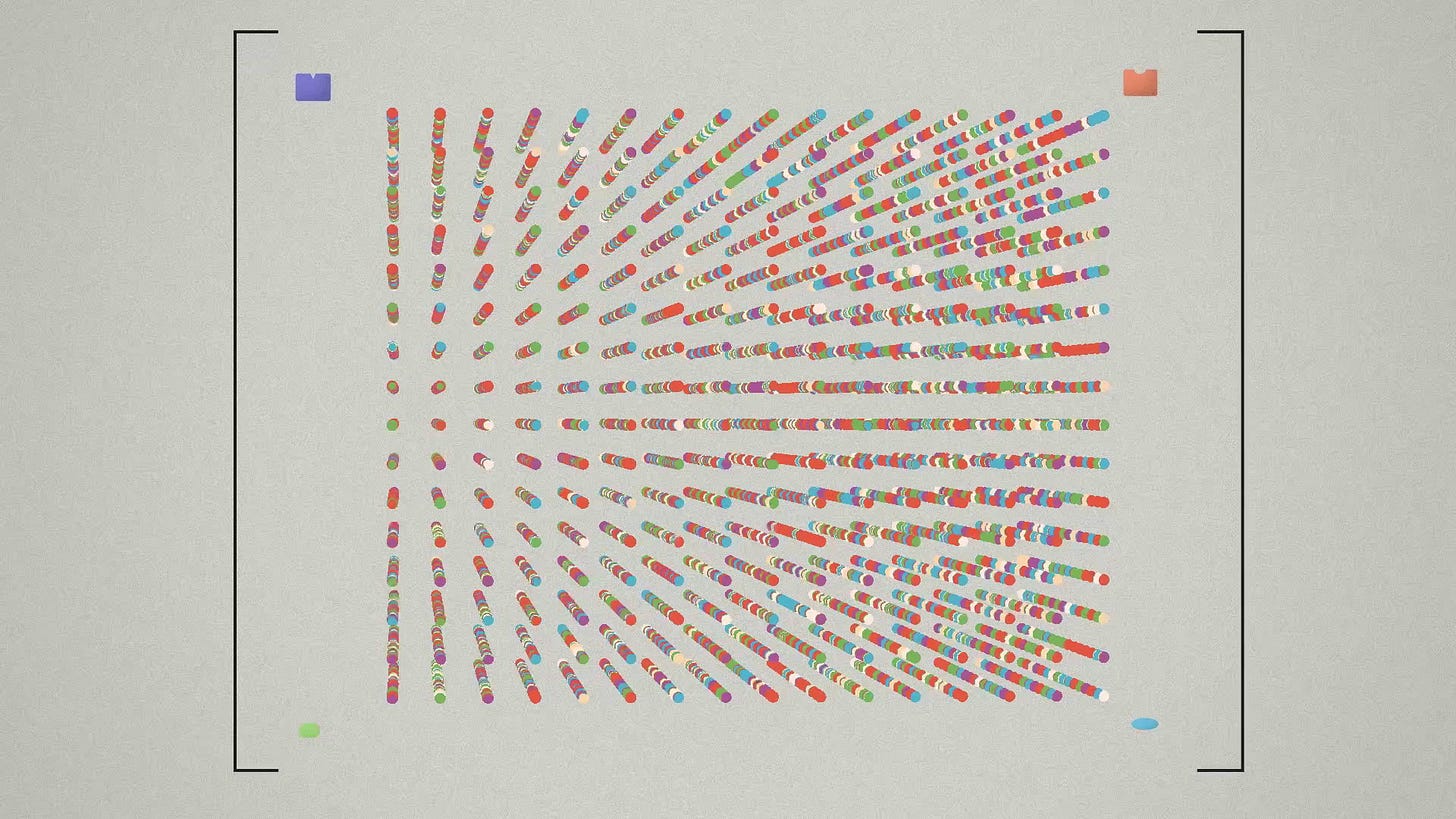

AI models are the opposite of this. Or rather, they're the complement of this. Context is their native language. A classical model in biology wants to have just a few moving pieces with elegant descriptions of how they interact. An AI model has a huge number of parameters and stupidly simple, mostly linear interactions.

Instead of making strong choices about what parts of a system are really important, AI models just kind of summarize the data that we give them. This is also complementary to classical biological theories. When we read in a textbook about how Notch is a transmembrane receptor that binds to Delta and activates genes like cyclin D1 and NF-κB, the data that led to the discovery of those interactions are left behind.

An AI model carries all of its training data with it. All the strengths and weaknesses are written in the model parameters. So to understand what a model is good at, or not good at, you need to familiarize yourself with the data that trained it. In my experience, scientists who do that are much more comfortable working with an AI model and much less likely to complain that its output is not interpretable. Understanding the data behind an AI model is comparable to understanding the theory behind a conventional model.

Look - I like an interpretable model as much as the next scientist. Give me a system of differential equations that explain how a cell behaves and I'm in nerd heaven. But I also know that biology, as a whole, is only partially captured by this kind of model. So let's not rush to demand that AI is interpretable on those terms.

Genetics and molecular biology give names to genes and make them the main character. AI models make data the main character. If I know anything about the scope of biological complexity, I know there's room to share the stage.

I get all of these arguments, but the issue for me is not really "Biology is complicated too!" but that model interpretability/introspectability can be an indispensable aid to validation. I can look at a linear regression model and if its coefficients are wack compared to my science headcanon, that gives me important information about the usefulness of that model. This bears directly on your statement "Understanding the data behind an AI model is comparable to understanding the theory behind a conventional model." Exactly, but there is no step where I can go see whether the model passes the smell test!
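To make this coefficient "smell test" concrete, here is a minimal sketch in Python with NumPy. It fits an ordinary least-squares model to simulated data and flags any coefficient whose sign contradicts what prior biological knowledge would predict. The two regulators, their expected signs, the simulated data, and the helper names `fit_linear_regression` and `smell_test` are all hypothetical, chosen only for illustration; a real check would use actual measurements and domain-specific priors.

```python
import numpy as np


def fit_linear_regression(X, y):
    """Ordinary least squares with an intercept column; returns (intercept, coefficients)."""
    design = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return beta[0], beta[1:]


def smell_test(coefs, expected_signs, names):
    """Return the names of features whose fitted sign disagrees with the expected sign."""
    flagged = []
    for name, coef, expected in zip(names, coefs, expected_signs):
        if np.sign(coef) != expected:
            flagged.append(name)
    return flagged


rng = np.random.default_rng(0)
n = 200
# Simulated (made-up) expression levels for two hypothetical regulators.
activator = rng.normal(size=n)
repressor = rng.normal(size=n)
# Simulated target expression: the activator raises it, the repressor lowers it.
target = 2.0 * activator - 1.5 * repressor + rng.normal(scale=0.5, size=n)

X = np.column_stack([activator, repressor])
names = ["activator", "repressor"]
intercept, coefs = fit_linear_regression(X, target)

# Prior expectation: activator positive, repressor negative.
flags = smell_test(coefs, expected_signs=[+1, -1], names=names)
print(coefs, flags)
```

Here the fitted signs match the prior, so nothing is flagged. If the prior were wrong, say `expected_signs=[+1, +1]`, the repressor would be flagged, which is exactly the kind of red flag a human can read directly off a linear model but cannot easily read off millions of parameters.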

I wouldn't argue that therefore there is no usefulness for AI models in biology (I have used them happily! And also less happily), but rather that AI models have a significantly higher burden of proof for their usefulness and applicability.

My pitiful human focalization, which you rightfully denigrate, indeed makes a mockery of the glory of biology. But I can at least evaluate other commensurable mockeries.

With a low-interpretability model, I've made my life much worse! Now I have to understand _two_ irreducibly complex systems, one of which doesn't even have any relevance to my biological question.

That's not to say that there isn't an impressive array of statistical tools for doing the evaluation I'm asking for. And I'd also never claim that there was a viable alternative for AI in many applications.

Just: remember that at the end of the day a human still has to make sense of it all. Or nothing we are doing matters at all.